Last month, Steven J. Vaughan-Nichols of ZDNet alerted us that Linux 4.0 will provide support for “no-reboot patching.” The gist: When a security patch or other critical OS update comes out, you can apply it without rebooting.

While rebootless patching is convenient for everyone, it’s a game changer for some applications. For example, web and cloud hosting services normally require customers to experience some downtime while the OS infrastructure is upgraded; with rebootless patching, upgrades happen seamlessly. Or, imagine upgrades to systems hosting in-memory databases: Right now, you have to checkpoint the DB to stable storage, stop the system, upgrade it, restart it, read the data from stable storage, and restart service. Just the checkpointing and re-reading from disk could take tens of minutes. With rebootless patching, this disruption is avoided; cf. Facebook’s usage of a modified memcached that supports preserving state across updates.

I’m particularly excited by this announcement because I’ve been working on the general problem of updating running software, which I call dynamic software updating (DSU), for nearly 15 years. In this post, co-authored with my PhD student Luís Pina, I take a closer look at the challenge that DSU presents, showing that what Linux will support is still quite far from what we might hope for, but that ideas from the research community promise to get us closer to the ideal, both for operating systems and hopefully for many other applications as well.

Dynamic software updating: What is it?

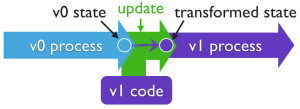

We have all experienced normal, or static, software updates: Download the new code, stop the affected program if it’s running, apply the patch, and restart the program. What makes dynamic software updates different is that they avoid the stop-and-restart part by also updating the running program’s execution state. This state consists of data, like linked tree and list structures that store our in-memory database, and control, like the execution stacks of active threads. The program code assumes the state adheres to a certain format and invariants, and therefore changing the code at run-time requires changing the execution state appropriately.

Consider an example. If in the updated program the entries of a hash table are extended with a timeout field, then a dynamic update needs to convert the in-memory hash table entries so that they contain a timeout field; otherwise, when the updated code goes to access that field, it will behave unpredictably. Or, if the new code uses two threads to perform some functionality which in the old version requires only one thread, then we need to map the existing thread’s stack to an equivalent one for the new code, and start a new thread to handle the extracted functionality. Changes to in-memory data, like the first example, we call data migrations, while changes to control, like the second example, we call control migrations.
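
To make the first example concrete, here is a minimal sketch of a data migration in C. The struct layouts, the `migrate_entries` helper, and the 60-second default are all assumptions made up for illustration; they do not come from any particular system.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical entry layout in the old version. */
struct entry_v1 {
    char key[32];
    int  value;
};

/* Hypothetical layout in the new version: same fields plus a timeout. */
struct entry_v2 {
    char key[32];
    int  value;
    long timeout_secs;   /* new field, absent in the old layout */
};

#define DEFAULT_TIMEOUT_SECS 60L   /* assumed default for existing entries */

/* Data migration: build a new-format table from an old-format one.
 * Returns NULL on allocation failure; the caller still owns `old`. */
struct entry_v2 *migrate_entries(const struct entry_v1 *old, size_t n)
{
    assert(old != NULL || n == 0);
    struct entry_v2 *fresh = calloc(n ? n : 1, sizeof *fresh);
    if (fresh == NULL)
        return NULL;
    for (size_t i = 0; i < n; i++) {
        memcpy(fresh[i].key, old[i].key, sizeof fresh[i].key);
        fresh[i].value = old[i].value;
        fresh[i].timeout_secs = DEFAULT_TIMEOUT_SECS;
    }
    return fresh;
}
```

The new code only ever sees `entry_v2` values, so it can read `timeout_secs` without caring whether an entry was created before or after the update.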

Rebootless patching in Linux 4.0

The rebootless patching support in Linux 4.0 is the descendant of two existing proposals, kpatch (from Red Hat) and kGraft (from SUSE).[ref]The new live kernel patching support that Linux 4.0 introduces is a common core from kpatch and kGraft. The new API allows modules that contain patches to be loaded, listed, and removed. It also performs the low-level redirection to replace patched functions. It still remains to bring in some kind of safety checking, as kpatch and kGraft differ on this.[/ref] These two descend from earlier research, by Jeff Arnold and Frans Kaashoek, on a solution called Ksplice, which was bought by Oracle in 2011.

These solutions focus on dynamic changes to code. The basic approach to dynamically updating a function f is to overwrite the first few instructions of the current f to jump to its new version. This approach has the benefit that any references to f elsewhere in the code or data (i.e., as function pointers) will still work.
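
Overwriting machine instructions is architecture-specific, so the sketch below uses a portable stand-in: `f` forwards through a function-pointer slot, and "patching" means swapping that slot. This is an analogy under stated assumptions, not the kernel's actual mechanism; `f_old`, `f_new`, `f_target`, and `patch_f` are hypothetical names.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

typedef int (*handler_fn)(int);

static int f_old(int x) { return x + 1; }   /* original body of f */
static int f_new(int x) { return x + 2; }   /* patched body of f */

/* Slot playing the role of the jump written over f's first instructions. */
static _Atomic(handler_fn) f_target = f_old;

/* Callers and stored function pointers keep referring to f itself. */
int f(int x)
{
    handler_fn target = atomic_load(&f_target);
    assert(target != NULL);
    return target(x);
}

/* "Apply the patch": every later call of f reaches the new body. */
void patch_f(void)
{
    atomic_store(&f_target, f_new);
}
```

A function pointer to `f` taken before `patch_f()` runs still reaches the new body afterwards, which mirrors the benefit described above.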

Activeness checking in kpatch

Function f cannot be running when this change takes place, for obvious reasons. Kpatch additionally requires that changed functions are not active, meaning they are not referenced by any process’s call stack at the time of an update (which it checks after pausing all processes). Why is this useful? The assumption is that the control state of the old and new kernel will be the same when no changed functions are active. As such, delaying the update until this condition is satisfied means the control state of the running kernel is valid for the new code. This makes it easier on the programmer.
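
The activeness check can be pictured as a scan over the saved return addresses of every paused task. The sketch below assumes those addresses have already been collected into plain arrays; the types and the `safe_to_patch` function are hypothetical and are not kpatch's actual code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Address range [start, end) occupied by one function being patched. */
struct func_range {
    uintptr_t start;
    uintptr_t end;
};

/* Saved return addresses of one paused task, innermost frame first. */
struct task_stack {
    const uintptr_t *ret_addrs;
    size_t depth;
};

static bool addr_in_patched(uintptr_t addr,
                            const struct func_range *p, size_t np)
{
    for (size_t i = 0; i < np; i++)
        if (addr >= p[i].start && addr < p[i].end)
            return true;
    return false;
}

/* True when no paused task has a patched function on its call stack. */
bool safe_to_patch(const struct task_stack *tasks, size_t ntasks,
                   const struct func_range *patched, size_t npatched)
{
    assert(tasks != NULL || ntasks == 0);
    for (size_t t = 0; t < ntasks; t++)
        for (size_t f = 0; f < tasks[t].depth; f++)
            if (addr_in_patched(tasks[t].ret_addrs[f], patched, npatched))
                return false;
    return true;
}
```

If the check fails, the update is simply retried later, once the offending functions have returned.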

Unfortunately, this assumption fails to account for the effects of prior execution of changed functions on the program’s data, even if the role of that data is the same between versions. Consider the following example (distilled from our prior work on dynamically updating OpenSSH daemons):

Old version:

```c
void go() {
  setup();
  /* update here */
  handle();
}
void setup() {
}
void handle() {
  global_ptr = init;
  x = (*global_ptr).field;
}
```

New version:

```c
void setup() {
  global_ptr = init;
}
void handle() {
  x = (*global_ptr).field;
}
```

Consider that a process is executing function go, which was not patched, right after function setup returned and before calling function handle. This means that the old version of function setup ran, which did not set the variable global_ptr. But after the update, the new version of function handle will execute, which will dereference the global variable and crash. A research study we did found that kpatch-style activeness checking is not sufficient to ensure that the control and data state is correct, and moreover can be quite restrictive.

Multi-version execution in kGraft

KGraft tries to address this problem by ensuring version consistency. That is, when performing a system call, the kernel either executes old code or new code, but not a mix of both. KGraft enforces version consistency on a per-process basis, so it is possible for one process to execute the new code while another process executes the old code. When a process makes a system call after the patch is installed, kGraft sets a “new universe” flag on that process. From that point on, that process will always use the patched code. KGraft uses an extra level of indirection called a “reality-check” to decide, at the entry of patched functions, which code to execute based on the process flag. Once the flag is set on all processes, kGraft drops the now-redundant indirection and jumps straight to the patched code.
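
A rough sketch of the per-process flag and the entry check follows. It is a user-space model with hypothetical names (`struct task`, `on_syscall_entry`, `handle`, `all_migrated`) and is not meant to reflect kGraft's real data structures.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct task {
    bool in_new_universe;   /* set when the task next enters the kernel */
};

static int handle_old(int x) { return x * 2; }       /* unpatched code */
static int handle_new(int x) { return x * 2 + 1; }   /* patched code */

/* Called at system-call entry once a patch has been installed. */
void on_syscall_entry(struct task *t)
{
    assert(t != NULL);
    t->in_new_universe = true;
}

/* "Reality check" at the entry of a patched function. */
int handle(const struct task *t, int x)
{
    return t->in_new_universe ? handle_new(x) : handle_old(x);
}

/* Once every task is flagged, the indirection can be dropped. */
bool all_migrated(const struct task *tasks, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (!tasks[i].in_new_universe)
            return false;
    return true;
}
```

Note that between the first and the last call to `on_syscall_entry`, two tasks can run `handle_old` and `handle_new` side by side.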

The problem with multi-version execution is that processes running two different code versions could interact, e.g., through common data structures, and thereby potentially violate new (or outdated) invariants. This problem would be particularly acute if the old and new version changed a data structure’s format.[ref]The multi-version execution approach was considered, for process-level dynamic updates, by the POLUS dynamic updating system.[/ref]

(Lack of) data updates

None of these kernel live-patching mechanisms supports updating data, at least not fully or easily. Ksplice proposed updating data using shadow data structures (based on DynAMOS by Makris and Ryu). Given that the original data structure has no space for new fields, the idea is to create a separate data structure just for them and rewrite the original binary code to use the new structure when manipulating the new fields. This approach, however, introduces a lot of complexity (and opportunities for bugs) as the code is maintained and patched further.
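
The shadow idea can be sketched as a side table keyed by the address of the original object. Everything here is hypothetical (`struct conn`, `conn_shadow_get`, the fixed-size table, the default of 30), and a real implementation would likely use a hash table rather than a linear scan.

```c
#include <assert.h>
#include <stddef.h>

/* Original structure; its layout cannot change in the running system. */
struct conn {
    int fd;
};

/* Fields introduced by the patch live in a separate shadow record. */
struct conn_shadow {
    const struct conn *owner;   /* object this shadow belongs to */
    long timeout_secs;
};

#define MAX_SHADOWS 64
static struct conn_shadow shadows[MAX_SHADOWS];
static size_t nshadows;

/* Find the shadow for `c`, creating it with a default on first use.
 * Returns NULL if the fixed-size table is full. */
struct conn_shadow *conn_shadow_get(const struct conn *c)
{
    assert(c != NULL);
    for (size_t i = 0; i < nshadows; i++)
        if (shadows[i].owner == c)
            return &shadows[i];
    if (nshadows == MAX_SHADOWS)
        return NULL;
    shadows[nshadows].owner = c;
    shadows[nshadows].timeout_secs = 30;   /* assumed default */
    return &shadows[nshadows++];
}
```

Every access to the new field has to go through `conn_shadow_get`, and shadows must be cleaned up when their owner is freed. This bookkeeping is one source of the complexity mentioned above.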

Full-featured DSU

Linux 4.0 DSU support is a far cry from supporting Vaughan-Nichols’ hope that “With Linux 4.0, you may never need to reboot your operating system again:” it is simply not flexible enough. The goal of DSU is to avoid stops-and-restarts; thus, ideally, any updates to a program we can make statically we can also make dynamically. I.e., we should be able to add new functions, change function types (e.g., to have new or different arguments), or modify data structures, e.g., by adding new data elements, breaking apart or changing the types of existing elements, adding and removing pointers, etc. But Linux 4.0’s rebootless patching support limits flexibility for the sake of better performance, backward-compatibility, and ease of use by the kernel programmer, even though the latter is not quite satisfying, as we have discussed: ironically, a “rebootless patch” could result in a crash, defeating the point!

The research community has been looking at how to support highly-flexible DSU for many years now.[ref]Good DSU surveys include Segal and Frieder’s 1993 paper, Ajmani’s 2002 survey, and the related work of the Kitsune journal paper.[/ref] We view whole-process DSU, pioneered by Makris and Bazzi’s UpStare system, as the most promising approach for user-space programs, owing to its flexibility. In this approach, the entirety of the new code is loaded into the memory of the running process and then control and data migrations directly update the execution state prior to, or even in conjunction with, subsequent execution of that code.

Kitsune and Rubah

We have developed two systems that take the whole-process approach: Kitsune, for C programs, and Rubah, for Java programs. Both systems are flexible enough to dynamically apply years’ worth of release-level updates, and they have been used to dynamically update substantial applications such as redis, memcached, snort, H2, and Voldemort. Besides their flexibility, these systems do not impose any measurable overhead on normal execution. This is because the whole-process approach allows the code to be fully optimized internally; such optimizations would be inhibited by added levels of indirection (like trampolines) and spatial non-locality common in per-function updating approaches.[ref]Note that UpStare imposes substantial execution overhead because it compiles the program specially to perform stack re-winding, for control migration; Kitsune and Rubah favor programmer-provided support, which turns out to be much more efficient.[/ref]

The downside of both systems is additional programmer work in supporting both control and data migration.

First, they require the programmer to add update points to the program. These are points at which the program polls to see whether a dynamic update is available, and if so will start applying it. Programs typically have only a handful of update points, and they are naturally placed at the start of long-running loops, when invariants are established and/or events have been fully handled.
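
As an illustration, an update point at the top of an event loop might look like the sketch below. The names (`request_update`, `update_point`, `serve`) are hypothetical and are not Kitsune's or Rubah's API; the flag stands in for whatever signal tells the program that a new version is available.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Set when a new version is ready; a real system might set this from a
 * signal handler or a control thread. */
static bool update_requested;

static long total;   /* stand-in for application state */

void request_update(void) { update_requested = true; }
long current_total(void) { return total; }

/* Hypothetical update point: true if the program should start updating. */
static bool update_point(void)
{
    return update_requested;
}

static void handle_event(int ev) { total += ev; }

/* Long-running loop with one update point at the top, where no event is
 * half-processed. Returns how many events were handled before an update
 * was picked up (all of them if none was requested). */
size_t serve(const int *events, size_t n)
{
    assert(events != NULL || n == 0);
    size_t done = 0;
    while (done < n) {
        if (update_point())
            break;   /* invariants hold here, so updating can begin */
        handle_event(events[done]);
        done++;
    }
    return done;
}
```

Placing the check at the top of the loop means an update never interrupts `handle_event` midway, so the state the transformers see is consistent.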

Second, the programmer must define state transformation functions which indicate how data from an old version of a type/class should be used to initialize the updated version. For example, the state transformer might provide the default value for a new field. Fortunately, the process of finding and updating the affected data values is automated. Kitsune updates all data at once, using a garbage collector-style mechanism. Rubah can do likewise, but also supports on-the-fly data migration, updating each outdated object when the new code first accesses it after the update. Both systems provide simple automation for writing state transformers, too, but sometimes the programmer needs to get involved.
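
The on-the-fly variant can be approximated in C with a versioned handle that migrates the object behind it on first access. This is a simplified model of the idea, not how Rubah is implemented (Rubah targets Java); `item_ref`, `item_access`, and the default of 60 are assumptions, and the objects are assumed to be heap-allocated.

```c
#include <assert.h>
#include <stdlib.h>

struct item_v1 { int value; };                      /* old layout */
struct item_v2 { int value; long timeout_secs; };   /* new layout */

/* Handle through which all code reaches an item. */
struct item_ref {
    int   version;   /* layout of the object behind `obj`: 1 or 2 */
    void *obj;
};

/* State transformer: fill a new-format item from an old-format one. */
static void transform_item(const struct item_v1 *old, struct item_v2 *fresh)
{
    fresh->value = old->value;
    fresh->timeout_secs = 60;   /* assumed default for the new field */
}

/* Accessor used by new code: migrate the item the first time it is
 * touched. Returns NULL (leaving the old object in place) if allocation
 * fails. */
struct item_v2 *item_access(struct item_ref *r)
{
    assert(r != NULL && r->obj != NULL);
    if (r->version == 1) {
        struct item_v2 *fresh = malloc(sizeof *fresh);
        if (fresh == NULL)
            return NULL;
        transform_item(r->obj, fresh);
        free(r->obj);
        r->obj = fresh;
        r->version = 2;
    }
    return r->obj;
}
```

Items that are never touched after the update are never transformed, which spreads the migration cost over normal execution instead of paying it all at update time.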

Third, the programmer must modify the program to support control-flow migration between versions. Kitsune and Rubah start running the new program from the equivalent threads’ entry points (after data migration has completed, or has been initiated in the on-the-fly case). To avoid re-running initialization code, the program can skip it, conditioned on being in updating mode; this mode is disabled once the equivalent update point is reached in the new program. We find that making control migration changes to the program is relatively simple because update points tend to be shallow in the control flow graph, and initialization code is often skipped en masse. For example, redis required only 2 extra LOC, and memcached required 9 LOC. Even better, this code rarely changes, so it is basically a one-time effort, rather than a per-update effort.
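
A toy version of this pattern is sketched below. The `updating` flag and the function names are hypothetical, and the counters exist only so the behavior can be observed; a real program would run its actual initialization and main loop in their place.

```c
#include <assert.h>
#include <stdbool.h>

/* True while the new version is replaying its way to the update point. */
static bool updating;

static int init_count;     /* how many times initialization really ran */
static int loop_entries;   /* how many times the main loop was reached */

static void init_tables(void) { init_count++; }

static void enter_main_loop(void)
{
    assert(!updating);   /* the loop only runs in normal mode */
    loop_entries++;
}

/* Entry point of the new version. `after_update` is true when control
 * arrives here through a dynamic update rather than a fresh start. */
void program_entry(bool after_update)
{
    updating = after_update;

    if (!updating)
        init_tables();   /* skipped on update: state was migrated instead */

    /* Equivalent of the old version's update point: resume normal mode. */
    updating = false;
    enter_main_loop();
}

int times_initialized(void) { return init_count; }
int times_loop_reached(void) { return loop_entries; }
```

The only update-specific code is the `if (!updating)` guard and the line that clears the flag, which is consistent with the small line counts reported above.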

In the end, this work amounts to only hundreds of lines of code (compared to tens or hundreds of thousands of lines in an application), and much of the work is done once. And the benefit is full-featured dynamic updates with excellent performance.

What’s next?

Is it possible to apply the techniques from whole-process DSU to the OS kernel? One possibility is to apply “whole-virtual machine” updates—virtual machines are to virtual machine monitors (VMMs) what processes are to operating systems. Indeed, a recent criticism of the Linux 4.0 DSU support points to this direction: “Rather than trying to patch a running kernel …, why not just save the entire state of the system, boot into an entirely new kernel, then restore the previous state on top of the new kernel?”

The idea of non-stop operation is appealing outside of standalone user programs and OS kernels. What about updating elements of a distributed system in a way that the communication protocol is changed? Or, what about updating the schema and contents of a database (so-called “schema migration”) while migrating the applications that use it (e.g., to avoid the 22-hour downtime experienced by MediaWiki)? We can also imagine updating cloud-based web applications: the updates can be pushed silently to the users even while the applications are in use. All of these changes would facilitate more ready application of functionality or performance improvements, and of security fixes. Once you realize that dynamic updates are not that different from static ones (they just require a little more work to update the execution state), you realize that the benefit of non-stop operation is very much within reach.

I had no idea there was something like DSU out there. Very informative. Thank you for this.

This reminds me of Erlang’s hot code replacement, which involves migrating data to a compatible new version before migrating the control flow.

Good observation. Erlang handles per-module code changes, and it’s amenable to the same sort of control migration patterns I talk about in the post. However, Erlang does not provide native support for migrating data; it’s entirely up to the programmer to find and transform existing data. Since that’s not always easy to do (e.g., how would you modify messages sitting in process mailboxes?), I suspect that practical evolutions end up being more constrained than they would be with Rubah and/or Kitsune.

I think the new function must be backward compatible with the messages still in the mailboxes.

Also relevant is Common Lisp’s update-instance-for-redefined-class protocol. My experience with trying to make it work with ASDF 2 shows that while it is possible, it requires a lot of work to get just right, getting comprehensive test coverage is very hard, various compiler optimizations (inlining, mostly) must be disabled, and frankly even when the tools help you infinitely more than in most other languages (as they do with Common Lisp), they still help you infinitely less than you’d need to fully automate safety.

In practice, after having disabled inlining, you need any new version of any upgraded function to be fully compatible with the old calling convention (incompatibility requires a name change; note that “name” in this context may include version, if that’s how your linker works), and data structures must be compatible with old functions until all old versions have been removed. My experience with schema upgrades in an object database also shows that a complete upgrade in the general case requires several “phases” with intermediate versions that can deal with both the previous and next schema and incrementally build new representations and/or indexes for the new code as the old code is running, etc. The code for the various phases can be largely automated if you use a “monotonic” schema model, but there again, when you write the code, it’s your responsibility to make it support the transitions correctly, and if you want to be able to upgrade arbitrary old versions, it can be “interesting”, and/or you will have to keep intermediate (yet sufficiently non-buggy) versions of the code around to reproduce old transitions. Making it work across several branches and merges of the schema rather than a linear history is even more “interesting”. It’s HELL, HELL, HELL.

Great points! In a sense, these issues arise because only a particular mechanism — code updating — is provided, and it’s left to the programmer to (figure out how to) use it to support application-level evolution. Ours and others’ DSU work attempts to support on-line, application-level evolution directly, by providing sufficient additional mechanisms and processes.
